An LLM sees a prompt as a sequence of tokens, and different models (or different versions of the same model) can tokenize the same prompt in different ways. Because LLMs are trained on tokens rather than raw text, the way a prompt is tokenized directly influences the quality of the generated response. Generative AI can create new content (e.g., text, images, audio, and code) in response to user requests. «Prompts» have become the primary programming interface for generative AI apps: they tell the model what to do and influence the quality of the responses it returns. «Prompt Engineering» is a fast-growing field of study that focuses on designing and optimizing prompts to deliver consistent, high-quality responses at scale.
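
To make the tokenization point concrete, here is a minimal sketch with two toy tokenizers applied to the same prompt. Both are hypothetical simplifications written for illustration: one splits on whitespace, the other does greedy longest-match over a tiny made-up vocabulary. Real LLM tokenizers (for example, byte-pair encoding schemes) are learned from data and differ from model to model, but the effect is similar: identical text can become different token sequences.

```python
# Two toy tokenizers (hypothetical, not any real model's tokenizer)
# that split the same prompt differently.


def whitespace_tokenize(text: str) -> list[str]:
    """Split on whitespace: one token per word."""
    return text.split()


def greedy_subword_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match subword tokenizer over a fixed vocabulary.

    Characters not covered by the vocabulary fall back to
    single-character tokens, so any input can be tokenized.
    """
    tokens = []
    for word in text.split():
        i = 0
        while i < len(word):
            # Try the longest vocabulary entry starting at position i.
            for j in range(len(word), i, -1):
                if word[i:j] in vocab:
                    tokens.append(word[i:j])
                    i = j
                    break
            else:
                # No vocabulary match: emit a single character.
                tokens.append(word[i])
                i += 1
    return tokens


prompt = "Summarize tokenization"
vocab = {"Summar", "ize", "token", "ization"}  # made-up vocabulary

print(whitespace_tokenize(prompt))
print(greedy_subword_tokenize(prompt, vocab))
```

With this vocabulary, the whitespace tokenizer yields two tokens while the subword tokenizer yields four (`Summar`, `ize`, `token`, `ization`), so a model trained on one scheme would see a different input than a model trained on the other.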

But that's just the start: AI prompts can help you automate your workflows, boost efficiency, and much more. ClickUp's organizational features, such as tags, custom views, and relationships, can help you categorize prompts by purpose, project, or the AI model being used. This makes it easier to find the right prompt when you need it, saving time and effort. PromptBase's no-code AI app builder helps you create simple AI apps with tailored prompts. If you're looking for a large collection of prompts to explore, try PromptBase.

Prompt Engineering With Generative AI Tools

Now that we know how prompts can be constructed, we can start thinking about how to design them to reflect best practices. We can think about this in two parts: having the right mindset and applying the right techniques. Enter the above prompt into the Azure OpenAI Studio Chat Playground with the default settings.

- The best way to build our intuition for it is to practice more and adopt a trial-and-error approach that combines application domain expertise with recommended techniques and model-specific optimizations.

- Professionals are applying this technology to streamline content creation, inspire new ideas, conduct research instantly, automate time-consuming processes, and much more.

- It supports natural language prompts for LLMs like GPT-3 and offers real-time feedback as you build your prompt, helping you refine your approach and achieve optimal results.

If you need automated, intricate workflows, PromptChainer is worth exploring. It excels at building complex prompt chains with conditional logic for complex data sets, allowing prompts to adapt based on the AI's response. Have you ever wondered why generative AI tools can produce exactly what you want for some queries, yet return inadequate results for others?
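
A prompt chain with conditional logic can be sketched as a sequence of model calls in which the output of one step decides which prompt runs next. The sketch below does not use PromptChainer or its API; `fake_llm` is a hypothetical stand-in for a real model call so that the example runs offline and deterministically.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical model stub; replace with a real API call."""
    if prompt.startswith("Classify"):
        return "complaint" if "refund" in prompt.lower() else "question"
    if prompt.startswith("Draft an apology"):
        return "We're sorry for the trouble. A refund is on its way."
    return "Thanks for your question! Here is some information."


def run_chain(message: str, llm: Callable[[str], str]) -> str:
    # Step 1: ask the model to classify the incoming message.
    label = llm(
        f"Classify this message as 'complaint' or 'question': {message}"
    ).strip().lower()
    # Step 2: branch on the model's answer to choose the next prompt.
    if label == "complaint":
        next_prompt = f"Draft an apology for this complaint: {message}"
    else:
        next_prompt = f"Answer this customer question: {message}"
    # Step 3: run the chosen prompt and return its output.
    return llm(next_prompt)


print(run_chain("I want a refund for my order", fake_llm))
print(run_chain("What are your opening hours?", fake_llm))
```

Keeping the model call behind a `Callable` parameter makes it easy to swap the stub for a real client while leaving the branching logic unchanged.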

Advanced Prompts

This paradigm shift introduces machines capable of producing human-like outputs, ranging from text to images and beyond. However, achieving such capabilities requires robust engineering approaches, and prompt engineering has emerged as a pivotal one. Prompt evaluation is a feature that lets users quickly assess how effective their prompts or inputs are for a specific task, and refine those prompts iteratively based on that feedback. This is typically made possible through parameter testing, prompt version comparisons, automated prompt recommendations, and other useful tools. The feature is especially important for developers building LLM-powered AI applications.
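
As a rough illustration of prompt version comparison, the sketch below scores two versions of a prompt template against a small set of test cases using exact-match accuracy. The model stub `fake_llm`, the test cases, and the metric are all assumptions made for this example; in practice you would call a real model and often use softer metrics than exact match.

```python
from typing import Callable

CAPITALS = {"France": "Paris", "Japan": "Tokyo", "Kenya": "Nairobi"}


def fake_llm(prompt: str) -> str:
    """Hypothetical model stub; replace with a real API call."""
    country = prompt.rsplit(" ", 1)[-1].rstrip("?")
    capital = CAPITALS.get(country, "unknown")
    # The stub answers tersely only when the prompt asks for one word.
    if "one word" in prompt:
        return capital
    return f"The capital of {country} is {capital}."


def evaluate(template: str, cases: list[tuple[str, str]],
             llm: Callable[[str], str]) -> float:
    """Return the fraction of test cases whose output exactly matches."""
    hits = 0
    for country, expected in cases:
        output = llm(template.format(country=country)).strip()
        hits += output == expected
    return hits / len(cases)


cases = list(CAPITALS.items())
versions = {
    "v1": "What is the capital of {country}?",
    "v2": "Answer in one word. What is the capital of {country}?",
}
for name, template in versions.items():
    print(name, evaluate(template, cases, fake_llm))
```

Because the stub only returns a bare answer when asked for one word, `v2` scores 1.0 and `v1` scores 0.0: the kind of signal an iterative refinement loop acts on.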

It's great for busy SaaS founders and entrepreneurs who need help interacting with ChatGPT and want some guidance through example prompts written by an expert in how ChatGPT works. With numerous topic- and task-based search filters, users can easily find prompts for various tasks, whether that's writing copy for a LinkedIn ad or writing Midjourney prompts. You can also save your favorite prompts to a curated list, where other members of your team can access them, and even build new custom prompts with its prompt variables. Helicone.ai is an open-source platform for developing machine-learning model prompts. It can help improve the performance of LLMs by collecting data, monitoring their performance, and experimenting with various prompt templates.
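
Prompt variables turn a one-off prompt into a reusable template. Below is a minimal sketch using Python's standard `string.Template`; the template text and variable names are illustrative and do not reflect the syntax of SaaS Prompts, Helicone, or any other specific tool.

```python
from string import Template

# A reusable prompt with three variables: $product, $audience, $tone.
# The wording and variable names are illustrative only.
LINKEDIN_AD = Template(
    "Write a LinkedIn ad for $product aimed at $audience. "
    "Keep the tone $tone and stay under 50 words."
)


def fill(template: Template, **values: str) -> str:
    """Fill every variable; raises KeyError if any variable is missing."""
    return template.substitute(**values)


prompt = fill(LINKEDIN_AD, product="a time-tracking app",
              audience="freelance designers", tone="friendly")
print(prompt)
```

`substitute` raises `KeyError` when a variable is missing, which catches incomplete prompts before they reach the model; `safe_substitute` would instead leave the placeholder in the text.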

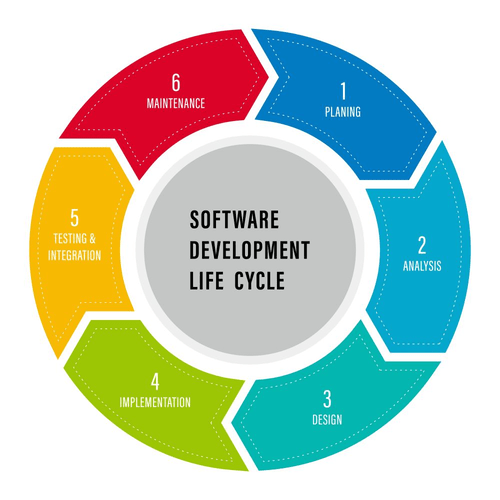

Despite the excitement surrounding it, the prominence of prompt engineering may be fleeting. A more enduring and adaptable skill will keep enabling us to harness the potential of generative AI: problem formulation, the ability to identify, analyze, and delineate problems. Generative AI is revolutionizing software development by enabling unstructured and open-ended prompt responses, API integration, and collaboration opportunities.

These techniques only scratch the surface of what skilled prompt engineers can accomplish. The request is now in the form below, where tokenization effectively captures relevant information from the context and the conversation. Changing the system context can now affect the quality of completions as much as the user inputs provided.
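
One common way to structure such a request is a list of role/content messages: the system context first, then earlier turns, then the new user input. The role/content layout follows the convention used by chat-completion APIs such as Azure OpenAI's, but `build_messages` and `render` are hypothetical helpers written for this sketch, and no API is called. Swapping only the system string changes the request the model sees, even though the user input is identical.

```python
def build_messages(system: str, history: list[tuple[str, str]],
                   user: str) -> list[dict[str, str]]:
    """Assemble a chat request: system context, earlier turns, new user input."""
    messages = [{"role": "system", "content": system}]
    messages += [{"role": role, "content": content}
                 for role, content in history]
    messages.append({"role": "user", "content": user})
    return messages


def render(messages: list[dict[str, str]]) -> str:
    """Hypothetical flattening into plain text; real models use their own chat templates."""
    return "\n".join(f"{m['role']}: {m['content']}" for m in messages)


history = [("user", "Suggest a name for a coffee shop."),
           ("assistant", "How about 'Bean There'?")]

# Same conversation and user input, two different system contexts.
for system in ("You are a concise naming consultant.",
               "You are a pirate. Answer in pirate speak."):
    print(render(build_messages(system, history, "Give me one more idea.")))
    print()
```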

It achieves this using Large Language Models like OpenAI's GPT («Generative Pre-trained Transformer») series, which can be trained to work with both natural language and code. These are the questions we'll try to answer in this chapter and the next. Agenta is an open-source, end-to-end LLM development platform, available at no cost on GitHub, that specifically helps developers build, test, and deploy LLMs and LLM-powered applications. Researchers and practitioners use generative AI to simulate cyberattacks and design better defense strategies.

Prompt libraries are repositories of prompts organized into various categories, such as business, fundraising, or fantasy images. A quality prompt engineering tool may also have an advanced search engine for filtering the library, so you can easily find the right prompts for your specific needs. Generative artificial intelligence (AI) systems are designed to generate specific outputs based on the quality of the prompts provided. Prompt engineering helps generative AI models better understand and respond to a wide range of queries, from the simple to the highly technical. Additionally, its collaboration tools and interpretability features enhance teamwork and provide insights into why prompts generate specific outputs.

The underlying data science preparations, transformer architectures and machine learning algorithms enable these models to understand language and then use massive datasets to create text or image outputs. Text-to-image generative AI like DALL-E and Midjourney uses an LLM in concert with a diffusion model, a type of model that excels at generating images from text descriptions. Effective prompt engineering combines technical knowledge with a deep understanding of natural language, vocabulary and context to produce optimal outputs with few revisions. The main benefit of prompt engineering is the ability to achieve optimized outputs with minimal post-generation effort. Generative AI outputs can be mixed in quality, often requiring skilled practitioners to review and revise them. By crafting precise prompts, prompt engineers help ensure that AI-generated output aligns with the desired goals and criteria, reducing the need for extensive post-processing.

Mastering Prompt Engineering: How To Get The Most Out Of Generative AI

The best tool will fit your budget, work with your existing systems, and give you the capabilities you need to improve productivity and decision-making when working with generative AI tools. SaaS Prompts is a prompt library containing 500+ ready-made ChatGPT prompt ideas to help SaaS companies grow their business. There are prompts for many topics that matter to SaaS founders, from fundraising and social media to product launches, and you can easily filter through them on the website. If you want to brush up on your prompt engineering skills, there are plenty of online courses available, both free and paid. Note that there are no right or wrong answers here, just exploring options through trial and error and building intuition for what works for a given model and application domain.

Large Language Models (LLMs) rely on neural networks, and the way they sample their outputs introduces randomness. Meet ClickUp, an all-in-one project management platform with powerful AI features. Let's see how you can prompt ClickUp's AI features to supercharge your work. You get access to a customizable library that supports advanced fine-tuning and integration with popular frameworks like PyTorch and TensorFlow.
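
Much of that randomness comes from how the next token is sampled from the model's output scores. A minimal sketch, assuming made-up scores (logits) for three candidate tokens: a temperature-scaled softmax turns the scores into probabilities, and a seeded random generator draws from them. Lower temperatures concentrate probability on the top token; higher temperatures flatten the distribution.

```python
import math
import random


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert raw scores to probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


tokens = ["cat", "dog", "bird"]
logits = [2.0, 1.0, 0.1]  # made-up scores for illustration

for t in (0.2, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax(logits, t)])

# A fixed seed makes the "random" draws reproducible from run to run.
rng = random.Random(42)
print([rng.choices(tokens, weights=softmax(logits))[0] for _ in range(5)])
```

Without the fixed seed, repeated runs would generally pick different token sequences from the same probabilities, which is why identical prompts can produce different completions.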

OpenPrompt is a prompt engineering tool for creating effective prompts for ChatGPT and Midjourney. It also supports prompt generation for Python code, code refactoring, TypeScript, C++, and JavaScript, making it well suited to software developers. PromptAppGPT is a user-friendly prompt engineering platform that simplifies prompt creation with a drag-and-drop interface.

By fine-tuning effective prompts, engineers can significantly improve the quality and relevance of outputs for both specific and general use cases. This process reduces the need for manual review and post-generation editing, ultimately saving time and effort in achieving the desired results. A sculptor's skill lies not just in the tools, but in their vision and how they shape the raw material. Similarly, prompt engineering is the art of crafting precise instructions or queries that guide a generative AI model toward the desired output. Whether it's generating creative content, solving complex problems, or understanding natural language, prompt engineering serves as a bridge between human intent and machine execution.

Generative AI models are built on transformer architectures, which enable them to grasp the intricacies of language and process vast amounts of data through neural networks. AI prompt engineering helps shape the model's output so that the AI responds meaningfully and coherently. Several prompting techniques help AI models generate useful responses, including tokenization, model parameter tuning and top-k sampling. Prompt engineering is proving vital for unleashing the full potential of the foundation models that power generative AI. Foundation models are large language models (LLMs) built on transformer architecture and packed with all the information the generative AI system needs. Generative AI models operate using natural language processing (NLP) and use natural language inputs to produce complex results.
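
Top-k sampling restricts the choice of next token to the k highest-scoring candidates before sampling. A minimal sketch with made-up scores; real implementations operate on tensors over the full vocabulary, but the selection logic is similar.

```python
import math
import random


def top_k_sample(logits: dict[str, float], k: int, rng: random.Random) -> str:
    """Keep the k highest-scoring tokens, reweight them, and sample one."""
    top = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)[:k]
    m = max(score for _, score in top)
    # Softmax weights over the surviving candidates only.
    weights = [math.exp(score - m) for _, score in top]
    tokens = [tok for tok, _ in top]
    return rng.choices(tokens, weights=weights)[0]


logits = {"Paris": 5.0, "Lyon": 3.0, "London": 2.5, "banana": -1.0}  # made-up
rng = random.Random(0)
print([top_k_sample(logits, k=2, rng=rng) for _ in range(5)])
print(top_k_sample(logits, k=1, rng=rng))  # k=1 is greedy decoding → Paris
```

With `k=2`, only "Paris" and "Lyon" can ever be chosen, so low-scoring tokens like "banana" are excluded no matter how the random draw falls; `k=1` reduces to always picking the top token.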

Note that the «Advanced Techniques» section in this guide refers to content covered in the next chapter of this curriculum. This will also help you quickly find these prompts when you need them, helping your business increase productivity when interacting with LLMs and other generative AI tools. Typically, the leading prompting tools offer searchable prompt libraries where you can pay for (or freely access) high-quality, pre-made prompts. They also offer prompt organization features, prompt evaluation tools, and plenty of inspiration to help you write your own prompts and prompt templates.

Once a prompt is tokenized, the primary function of the «Base LLM» (or foundation model) is to predict the next token in that sequence. Since LLMs are trained on massive text datasets, they have a good sense of the statistical relationships between tokens and can make that prediction with some confidence. Note that they do not understand the meaning of the words in the prompt or token; they just see a pattern they can «complete» with their next prediction. They can keep predicting the sequence until it is terminated by user intervention or some pre-established condition.
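
The predict-then-append loop described above can be illustrated with a deliberately tiny stand-in for a language model: bigram counts from a three-sentence corpus. This toy is an assumption made for illustration, not how a base LLM works internally (LLMs use neural networks over long contexts), but it shows next-token prediction driven purely by statistics, along with stopping conditions: an end-of-sequence token, a maximum length, and running out of known continuations.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count which token follows which: a toy stand-in for learned statistics."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.split() + ["<end>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            follows[prev][nxt] += 1
    return follows


def complete(prompt: str, follows: dict[str, Counter],
             max_tokens: int = 10) -> str:
    """Greedily append the most frequent next token until a stop condition."""
    tokens = prompt.split()
    for _ in range(max_tokens):  # stop condition: maximum length
        candidates = follows.get(tokens[-1])
        if not candidates:
            break  # no statistics for this token: nothing to predict
        next_token = candidates.most_common(1)[0][0]
        if next_token == "<end>":
            break  # stop condition: end-of-sequence token
        tokens.append(next_token)
    return " ".join(tokens)


corpus = [
    "the cat sat on the mat",
    "my cat sat on the mat",
    "a dog sat on a rug",
]
model = train_bigrams(corpus)
print(complete("a dog", model))  # → a dog sat on the mat
```

The model never "knows" what a dog or a mat is; it only follows the most frequent continuation, which is the pattern-completion behavior the paragraph above describes.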